Researchers at the University of Massachusetts and the Air Force Research Laboratory Information Directorate have recently created a 3-D computing circuit that could be used to map and implement complex machine learning algorithms, such as convolutional neural networks (CNNs). This 3-D circuit, presented in a paper published in Nature Electronics, comprises eight layers of memristors, electrical components that regulate the electrical current flowing in a circuit and directly implement neural network weights in hardware.

Professors Qiangfei Xia and Jianhua (Joshua) Yang of the electrical and computer engineering (ECE) department have published yet another in a long series of papers in the family of “Nature” academic journals, this one in the latest issue of “Nature Electronics.” In this paper, the two ECE researchers and their research team described their construction and operation of a three-dimensional (3D) circuit composed of eight layers of integrated memristive devices in which the novel structure makes it possible to directly map and implement complex neural networks.

“Previously, we developed a very reliable memristive device that meets most requirements of in-memory computing for artificial neural networks, integrated the devices into large 2-D arrays and demonstrated a wide variety of machine intelligence applications,” Prof. Qiangfei Xia, one of the researchers who carried out the study, told TechXplore. “In our recent study, we decided to extend it to the third dimension, exploring the benefit of a rich connectivity in a 3-D neural network.”

Constructing a computing circuit in three dimensions (3D) is a necessary step to enable the massive connections and efficient communications required in complex neural networks. 3D circuits based on conventional complementary metal–oxide–semiconductor transistors are, however, difficult to build because of challenges involved in growing or stacking multilayer single-crystalline silicon channels.

Here we report a 3D circuit composed of eight layers of monolithically integrated memristive devices. The vertically aligned input and output electrodes in our 3D structure make it possible to directly map and implement complex neural networks. As a proof-of-concept demonstration, we programmed parallelly operated kernels into the 3D array, implemented a convolutional neural network and achieved software-comparable accuracy in recognizing handwritten digits from the Modified National Institute of Standards and Technology (MNIST) database. We also demonstrated the edge detection of moving objects in videos by applying groups of Prewitt filters in the 3D array to process pixels in parallel.
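For readers unfamiliar with Prewitt filters, the following is a minimal software sketch of the edge-detection operation itself, not of the team's hardware. The function names and the toy 4×5 frame are illustrative assumptions, and the loops run sequentially, whereas the 3D array is described as processing pixels in parallel.

```python
# Standard 3x3 Prewitt kernels: one responds to horizontal intensity
# changes (vertical edges), the other to vertical changes (horizontal edges).
PREWITT_X = [[-1, 0, 1],
             [-1, 0, 1],
             [-1, 0, 1]]
PREWITT_Y = [[-1, -1, -1],
             [0, 0, 0],
             [1, 1, 1]]


def apply_kernel(image, kernel):
    """Slide a 3x3 kernel over a 2D image (no padding, no kernel flip,
    i.e. the cross-correlation convention commonly used in CNNs)."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(rows - 2):
        out_row = []
        for c in range(cols - 2):
            acc = 0
            for i in range(3):
                for j in range(3):
                    acc += kernel[i][j] * image[r + i][c + j]
            out_row.append(acc)
        out.append(out_row)
    return out


def prewitt_edges(image):
    """Approximate edge strength as |Gx| + |Gy| at each valid pixel."""
    gx = apply_kernel(image, PREWITT_X)
    gy = apply_kernel(image, PREWITT_Y)
    return [[abs(a) + abs(b) for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# Hypothetical toy frame: dark on the left, bright on the right.
frame = [[0, 0, 0, 1, 1],
         [0, 0, 0, 1, 1],
         [0, 0, 0, 1, 1],
         [0, 0, 0, 1, 1]]

edges = prewitt_edges(frame)
print(edges)  # [[0, 3, 3], [0, 3, 3]]
```

The flat region on the left produces zero response, while the windows that straddle the dark-to-bright step produce a strong one. Each output pixel depends only on its own 3×3 neighborhood, which is what makes the operation a natural fit for parallel hardware.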

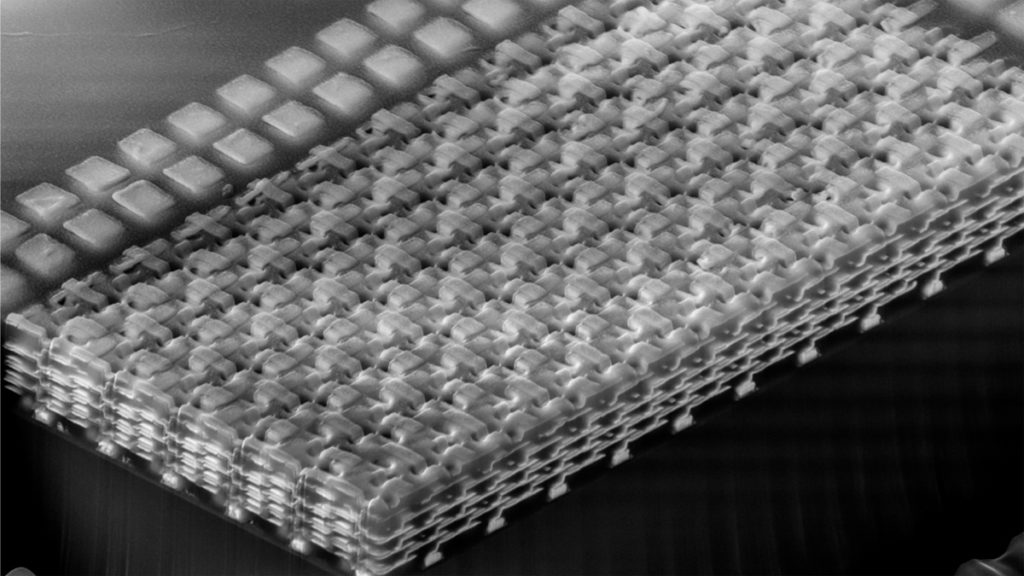

An image of part of the memristor array is highlighted on the cover of “Nature Electronics.” This is the second time that the same group of researchers has landed on the cover of this journal (the first was in January 2018).

According to the website memristor.org, “Memristors are basically a fourth class of electrical circuit, joining the resistor, the capacitor, and the inductor, that exhibit their unique properties primarily at the nanoscale.” Among other advancements, this new research by Xia, Yang and their team represents a major step forward in using memristors for complex computing processes that closely imitate the connectivity of the human nervous system and brain.

As Xia and Yang said about their new paper, “It is about a 3D memristor neural network that was used for parallel image and video processing, moving one step closer to mimicking the complex connectivity in the brain.”

Building integrated circuits into the third dimension is a straightforward way to address the challenges of further shrinking device size, an essential route to improving chip performance. With 3D integration, one can simply pack in more devices without the need for aggressive scaling. However, the key message of their Nature Electronics paper, according to Xia and Yang, is that constructing a computing circuit in 3D is a necessary step to enable the massive connections and efficient communications required in complex neural networks. Furthermore, a 3D neural network would be able to take 2D inputs, a paradigm-shifting and less resource-demanding approach than most current neural network topologies, in which the input data have to be one-dimensional vectors.

Nevertheless, “making a 3D chip is an extremely challenging task,” said Xia and Yang. “Putting one layer on top of the other, and to carry out the stacking process many times, requires delicate chip design and rigorous process control.”

Indeed, it took the first author of the paper, Peng Lin, a few years to build this 3D circuit, which is a major part of his Ph.D. work. Dr. Lin graduated from the UMass ECE department in September 2017. He is now a postdoctoral associate at the Massachusetts Institute of Technology, leading the neuromorphic computing efforts in a research group.

As background to this feat, Xia and Yang explained that deep neural networks are computationally heavy models with a huge number of functional and complex connections. Data flows in such networks are translated into massive physical instructions that run in digital computers, a process which consumes tremendous computing resources and imposes a performance ceiling due to the need to shuttle data between the processing and memory units.

However, according to Xia and Yang, emerging non-volatile devices, such as memristors, can directly implement synaptic weights and efficiently carry out resource-hungry matrix operations inside ensemble arrays.
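The matrix operation a memristor array performs can be sketched in a few lines. In the usual idealized crossbar model, each device's conductance stores one weight, input voltages are applied to the rows, and each column wire sums the resulting currents (Ohm's law per device, Kirchhoff's current law per column). The function name and all numerical values below are illustrative assumptions, and real devices add noise, wire resistance and nonlinearity that this sketch ignores.

```python
def crossbar_output_currents(voltages, conductances):
    """Idealized crossbar read-out.

    voltages:     list of row input voltages V_i (volts)
    conductances: matrix G[i][j] of device conductances (siemens),
                  one device at each row/column crossing
    returns:      list of column currents I_j = sum_i V_i * G[i][j] (amperes)
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one input voltage per crossbar row")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 3x2 array: three input rows, two output columns.
G = [[1e-4, 2e-4],
     [3e-4, 4e-4],
     [5e-4, 6e-4]]
V = [0.1, 0.2, 0.0]

currents = crossbar_output_currents(V, G)
print(currents)
```

With these made-up values the two columns carry roughly 70 µA and 100 µA. In the physical array every multiply-and-add happens at once, in place, where the weights are stored, which is why no data has to be shuttled between separate memory and processing units.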

“Moreover,” said Xia and Yang, “neural networks could be fully placed in memristor-based systems and executed on site, transforming the computing paradigms from high-demand, sequential, digital operations into an activation-driven, parallel, analogue-computing network.”

But there’s still a crucial problem with memristors used in this context. Existing memristor-based systems are built on regular, two-dimensional (2D), crossbar arrays. Their simplified connections cannot efficiently implement the full topology of often more complex structures in deep neural networks.

Ultimately, it is the connections between neurons (which generate the emergent functional properties and states) that define the capability of a neural network. Therefore, a fundamental shift in architecture design to emphasize the functional connectivity of device arrays is required to unleash the full potential of neuromorphic computing.

“In this article,” as Xia and Yang wrote, “we show that extending the array design into 3D enables a large number of functional connections, allowing a complex memristor neural network to be built. Using the convolutional neural network as an example, we built an eight-layer prototype 3D array with purposely designed connections that are able to perform parallel convolutional kernel operations in the convolutional neural network.”

Xia and Yang added, “The 3D design provides a high degree of functional complexity, which goes beyond the purpose of increasing packing density in conventional 3D architectures and opens a critical avenue to using memristors for neuromorphic computing.”

In this case, neuromorphic computing means computing that mimics the neuro-biological architectures present in the human nervous system.

As an example of Xia and Yang's continuing success in publishing in prestigious scientific journals, they have published around 30 papers in “Nature” and “Science” research journals in the last few years.