Researchers from China and Japan proposed a “lightweight and multi-scale model for MLCC appearance defect detection” in their work published in the journal Optics & Laser Technology.

Introduction

Multilayer ceramic capacitors (MLCCs) are critical components in electronics manufacturing, where surface appearance defects can compromise product reliability and yield.

Detecting these defects is challenging because they vary widely in shape and size, often have unclear boundaries, and must be found quickly to meet real-time production demands.

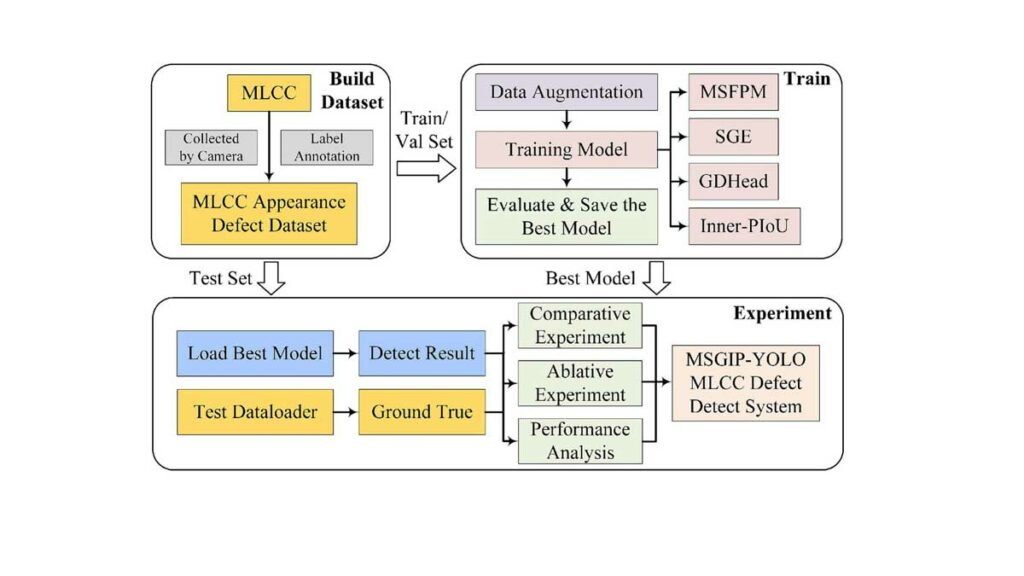

The article introduces a new defect inspection vision system and a lightweight, multi-scale deep learning model designed to improve accuracy and speed in MLCC defect detection, addressing the limitations of existing approaches in industrial environments.

Key points

- Problem focus: MLCC defect detection is hindered by irregular shapes, scale variation, fuzzy boundaries, and the need for rapid inference in production settings.

- System and model: A defect inspection vision system (DIVS) and a lightweight model named MSFPM-SGE-GDHead-InnerPIoU-YOLO (MSGIP-YOLO) are proposed to tackle multi-scale detection efficiently.

- Multi-scale fusion: The Multi-Scale Fusion Pyramid Module (MSFPM) enables robust multi-scale feature aggregation, enhancing detection across different defect sizes.

- Spatial enhancement: Spatial Group-wise Enhance (SGE) optimizes spatial feature distributions, improving cross-scale consistency and localization.

- Lightweight head: The Group Decoupled Head (GDHead) reduces redundant channels to lower parameters without sacrificing accuracy, improving speed.

- Loss function: Inner-PIoU replaces the standard bounding-box regression loss to boost generalization across scales and accelerate convergence.

- Performance: On the MLCC dataset, the model achieves 93.4% mAP, 89.9% F1, 3.77M parameters, and 150 FPS inference, demonstrating real-time viability.
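To make the "lightweight head" point more concrete, the sketch below counts the parameters of a single convolution layer under two generic slimming strategies: using fewer channels and using grouped convolution. This is an illustration of the general arithmetic only. The summary does not say how GDHead is built, so the layer sizes, the group count, and the assumption that "group" refers to grouped convolution are all hypothetical.

```python
def conv2d_params(c_in, c_out, kernel=3, groups=1, bias=True):
    """Parameter count of a 2D convolution layer.

    With grouped convolution, each output channel only sees
    c_in / groups input channels, so the weight count shrinks by `groups`.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    weights = kernel * kernel * (c_in // groups) * c_out
    return weights + (c_out if bias else 0)


# Hypothetical layer sizes, chosen only for illustration.
baseline = conv2d_params(256, 256)           # dense 3x3 convolution
grouped = conv2d_params(256, 256, groups=4)  # same channels, 4 groups
slimmed = conv2d_params(128, 128)            # half the channels

print(baseline, grouped, slimmed)  # 590080 147712 147584
```

In this toy case both strategies cut the layer to roughly a quarter of its original size, which is the kind of saving a slimmer head can contribute to a small total parameter budget.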

Extended summary

The article targets three core challenges in MLCC appearance defect detection: high variance in defect geometry and scale, blurred or irregular edges, and stringent speed requirements on production lines. Traditional detectors often struggle with these conditions because their feature pyramids and heads are either too heavy for real-time use or insufficiently expressive for fine-grained, multi-scale localization. To bridge this gap, the authors construct an integrated defect inspection vision system (DIVS) and present a tailored model, MSGIP-YOLO, that balances multi-scale accuracy with inference efficiency.

Central to the approach is the Multi-Scale Fusion Pyramid Module (MSFPM), designed to aggregate features across scales more effectively than standard pyramids. By prioritizing efficient fusion, MSFPM strengthens the representation of both small and large defects while maintaining a compact architecture. Complementing this, Spatial Group-wise Enhance (SGE) refines spatial feature distributions, ensuring that salient defect cues are preserved and enhanced across scales, which is especially useful for defects with unclear boundaries where subtle spatial patterns determine correct localization.
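The spatial enhancement idea can be illustrated with a small pure-Python sketch of group-wise spatial gating, in the spirit of the SGE module from the attention literature. It handles a single channel group, stores each channel map as a flat list, and uses fixed `gamma` and `beta` values in place of learned parameters. These simplifications are assumptions made for readability, and the variant used inside MSGIP-YOLO may differ.

```python
import math


def sge_single_group(features, gamma=1.0, beta=0.0):
    """Spatial gating for one channel group.

    features: list of C channel maps, each a flat list of H*W values.
    gamma, beta: stand-ins for learned scale/shift (assumed values).
    """
    n_pos = len(features[0])
    # Global descriptor of the group: per-channel spatial average.
    g = [sum(ch) / n_pos for ch in features]
    # Similarity between each position's feature vector and the descriptor.
    coeffs = [sum(ch[i] * g[c] for c, ch in enumerate(features))
              for i in range(n_pos)]
    # Normalize the similarity map over spatial positions.
    mean = sum(coeffs) / n_pos
    var = sum((c - mean) ** 2 for c in coeffs) / n_pos
    std = math.sqrt(var) + 1e-5
    # Sigmoid gate per position, then rescale every channel by it.
    gates = [1.0 / (1.0 + math.exp(-(gamma * (c - mean) / std + beta)))
             for c in coeffs]
    return [[v * gates[i] for i, v in enumerate(ch)] for ch in features]


# Toy input: two channels, four positions, one strong response at the end.
toy = [[1.0, 1.0, 1.0, 5.0], [1.0, 1.0, 1.0, 5.0]]
enhanced = sge_single_group(toy)
print([round(v, 2) for v in enhanced[0]])
```

Positions whose features agree with the group's overall response receive a gate closer to 1, while weaker positions are suppressed, which is one way subtle spatial cues around a fuzzy defect boundary can be emphasized.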

To further streamline inference, the Group Decoupled Head (GDHead) rethinks the detection head architecture by minimizing redundant channels and decoupling tasks to reduce parameter count without degrading accuracy. This design maintains precision while enabling high frame rates suitable for in-line inspection. Additionally, the standard localization loss is replaced with Inner-PIoU, which encourages more robust box regression under scale variation. This loss formulation improves generalization to defects of diverse sizes and speeds up training convergence, leading to more reliable detection under real-world variability.
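As a rough illustration of the loss idea, the sketch below combines two formulations from the bounding-box regression literature: an "inner" IoU computed on auxiliary boxes that share the original centers but are rescaled by a ratio, and a PIoU-style penalty based on edge distances normalized by the ground-truth box size. The exact form used in the paper is not given in this summary, so the combination shown here, the function names, and the `ratio=0.75` default are assumptions.

```python
import math


def iou_xyxy(a, b):
    """Plain IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def scale_box(box, ratio):
    """Auxiliary box with the same center, width and height scaled by ratio."""
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    hw, hh = (box[2] - box[0]) * ratio / 2, (box[3] - box[1]) * ratio / 2
    return (cx - hw, cy - hh, cx + hw, cy + hh)


def inner_iou(pred, gt, ratio=0.75):
    """IoU measured on the rescaled auxiliary boxes."""
    return iou_xyxy(scale_box(pred, ratio), scale_box(gt, ratio))


def piou_penalty(pred, gt):
    """Edge-distance penalty normalized by the ground-truth size."""
    w_gt, h_gt = gt[2] - gt[0], gt[3] - gt[1]
    p = ((abs(pred[0] - gt[0]) + abs(pred[2] - gt[2])) / w_gt
         + (abs(pred[1] - gt[1]) + abs(pred[3] - gt[3])) / h_gt) / 4
    return 1.0 - math.exp(-p * p)


def inner_piou_loss(pred, gt, ratio=0.75):
    """Assumed combination: (1 - inner IoU) plus the PIoU-style penalty."""
    return 1.0 - inner_iou(pred, gt, ratio) + piou_penalty(pred, gt)


gt = (0.0, 0.0, 10.0, 10.0)
print(inner_piou_loss((0.0, 0.0, 10.0, 10.0), gt))  # perfect match
print(inner_piou_loss((2.0, 0.0, 12.0, 10.0), gt))  # shifted prediction
```

With a ratio below 1, the auxiliary boxes overlap less than the originals for the same offset, so the loss reacts more sharply to small misalignments. That behavior is one plausible reason such a loss could speed up convergence on well-overlapping boxes.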

The experimental validation on an MLCC-specific dataset demonstrates the practical impact of these choices. MSGIP-YOLO achieves 93.4% mean average precision (mAP) and an 89.9% F1 score, indicating strong accuracy and balanced precision-recall performance. With just 3.77 million parameters and an inference speed of 150 frames per second (FPS), the model is sufficiently lightweight for deployment in industrial scenarios where real-time throughput and low latency are critical. These metrics underscore the model’s ability to deliver both speed and robustness, overcoming common barriers in MLCC defect detection such as fuzzy boundaries and irregular shapes.
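The reported figures can be translated into deployment-oriented quantities with some simple arithmetic. The sketch below converts frames per second into an average per-frame time budget, estimates the raw weight size assuming 32-bit floats, and shows how F1 is defined from precision and recall. The 4-bytes-per-parameter assumption and the precision and recall values in the last call are hypothetical, since the summary reports only the final F1 score.

```python
def frame_latency_ms(fps):
    """Average time budget per frame, in milliseconds."""
    return 1000.0 / fps


def weight_size_mb(num_params, bytes_per_param=4):
    """Raw weight storage in megabytes (assumes FP32 by default)."""
    return num_params * bytes_per_param / 1e6


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


print(f"{frame_latency_ms(150):.2f} ms per frame")   # 6.67 ms per frame
print(f"{weight_size_mb(3.77e6):.2f} MB of weights")  # 15.08 MB of weights
# Hypothetical precision/recall pair, for illustration only.
print(f"{f1_score(0.92, 0.88):.3f}")
```

At 150 FPS the model has under 7 ms on average for each image, and its weights occupy about 15 MB in FP32 under the stated assumption.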

The article positions MSGIP-YOLO as a practical, deployable solution for modern electronics manufacturing lines. By combining MSFPM for multi-scale feature fusion, SGE for spatial refinement, GDHead for parameter efficiency, and Inner-PIoU for improved regression behavior, the system aligns with industrial constraints while delivering improved detection quality. The dataset results suggest that the architecture is not merely theoretically appealing but also operationally effective within a defect inspection vision system, highlighting its potential for broader use in appearance inspection tasks beyond MLCCs.

Conclusion

MSGIP-YOLO advances MLCC appearance defect detection through targeted architectural changes that improve multi-scale sensitivity and inference speed. With 93.4% mAP at 150 FPS and only 3.77M parameters, it meets the demands of real-time industrial inspection. The combination of MSFPM, SGE, GDHead, and Inner-PIoU offers a cohesive framework that addresses scale variation, spatial clarity, and efficiency, positioning the approach as a promising, practical solution for high-throughput quality assurance in electronics manufacturing.

Read the complete paper:

Junwei Zheng, Xiuhua Cao, Dawei Zhang, Kiyoshi Takamasu, Meiyun Chen. A lightweight and multi-scale model for MLCC appearance defect detection. Optics & Laser Technology, Volume 193, Part A, 2026, 114173, ISSN 0030-3992. https://doi.org/10.1016/j.optlastec.2025.114173 (https://www.sciencedirect.com/science/article/pii/S0030399225017645)