Source: ECN article

Each New Year invites a host of achievements, inventions, and technological advancements. As we enter the early months of another year, industry experts share their opinions on what’s ahead for tech and engineering in 2018.

By Peter Lafreniere, Cable Product Manager, AFC Cable Systems

Daylight Harvesting

Building energy codes are growing stricter each year, and commercial and institutional spaces face increasing pressure to reduce power and energy costs. Daylight harvesting is one way to reduce energy consumption by using daylight in place of artificial light to illuminate a space. With daylight harvesting, lighting control systems dim the lights in proportion to the natural light available.
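The proportional dimming idea can be sketched in a few lines. This is a minimal illustration, not any vendor's control algorithm. It assumes a single photosensor reading of daylight at the work surface in lux, a hypothetical design target, a fixture whose light output scales linearly with its dimming level, and a driver that holds at a minimum level instead of switching off. Real controllers add deadbands, fade rates, and sensor calibration.

```python
def dimming_level(target_lux, daylight_lux, full_output_lux, min_level=0.1):
    """Fraction of full electric-light output needed to top up daylight.

    Assumes light output scales linearly with dimming level and that the
    fixture holds at min_level rather than switching off in bright daylight.
    """
    shortfall = max(0.0, target_lux - daylight_lux)  # light the fixtures must add
    level = shortfall / full_output_lux
    return min(1.0, max(min_level, level))           # clamp to the driver's range

# Hypothetical 500 lux work-surface target; fixtures supply 500 lux at full output.
for daylight in (0, 300, 600):
    print(daylight, "lux daylight ->", dimming_level(500, daylight, 500))
```

With no daylight the fixtures run at full output; at 300 lux of daylight they drop to 40 percent; once daylight alone exceeds the target they sit at the minimum level.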

California has been the most aggressive state in using daylight harvesting to meet code mandates, but many other states are seeing the payoff in both energy savings and reduced power costs. Advances in LED lighting are making dimmable fixtures a reality: LED fixtures are now almost always supplied with dimmable drivers, which makes LED an easy and inexpensive option compared with dimmable fluorescent ballasts.

Tunable Lighting

Another emerging trend in the lighting industry, driven by the growing sophistication of LEDs, is “tunable lighting.” Tunable lighting is a science-supported technology in which shifts in color temperature and light level mimic the human circadian rhythm. Basing the intensity and color of light on the circadian rhythm draws a closer connection to nature, an element often missing from indoor settings. This technology is especially important for offices, schools, and healthcare facilities; it has been shown that people can heal faster or concentrate better when the color of light is adjusted to be more natural.
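A tunable-lighting controller typically follows a daily schedule of color temperatures. The sketch below linearly interpolates a target correlated color temperature (CCT) between hypothetical set points: warm 2700 K in the early morning, cool 6500 K at midday, and back to 2700 K in the evening. The schedule values and the function name are illustrative assumptions, not a published standard; light level could be scheduled the same way.

```python
# Hypothetical schedule: (hour of day, correlated color temperature in kelvin).
SCHEDULE = [(6.0, 2700.0), (12.0, 6500.0), (20.0, 2700.0)]

def cct_at(hour, schedule=SCHEDULE):
    """Linearly interpolate the target color temperature for an hour in 0-24."""
    if hour <= schedule[0][0]:
        return schedule[0][1]      # before the first set point: hold warm
    if hour >= schedule[-1][0]:
        return schedule[-1][1]     # after the last set point: hold warm
    for (h0, k0), (h1, k1) in zip(schedule, schedule[1:]):
        if h0 <= hour <= h1:
            frac = (hour - h0) / (h1 - h0)
            return k0 + frac * (k1 - k0)

for hour in (6, 9, 12, 16, 20):
    print(hour, cct_at(hour))
```

Piecewise-linear interpolation keeps the transitions gradual; a real system might use smoother curves or tie the set points to local sunrise and sunset.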

Prefabricated Wiring

Most industrial sectors have seen a decline in skilled tradesmen: for every three electricians who retire, only one takes their place. The industry has responded by developing technology that allows faster installation. Prefabricated wiring solutions are manufactured in a controlled environment with the required certifications and listings, so less work must be done in the field. Installation is easier and faster, and the technician can move on to the next job sooner.

By Jim Beneke, Vice President, Engineering and Technology, Avnet

Since the introduction of the world’s first microcontroller in 1974, billions of these tiny powerhouses have been deployed in every kind of electronic device and system. Evolving from four-bit machines to eight-bit, then 16-bit, and now the default 32-bit designs, these mighty wonders have morphed into monsters of complexity and capability, with progressive improvements in functional integration, power reduction, performance, and cost. Given this continued evolution of MCU technology, I’m seeing a “rebirth” of the microcontroller.

MCU development cycles have been lengthening, and software complexity has grown in kind. Arm Ltd.’s move to split its processor technology into two distinct categories, A-class application processors and M-class microcontroller processors, set the stage for the “rebirth” we are witnessing today. With this split came a divide in software and in how it was targeted at the two processor classes. Application processors gained the support of more robust, commercial operating systems, namely Linux, Windows, and Android. With the growth of the Internet of Things (IoT), embedded systems based on application processors could leverage the wealth of drivers, libraries, and stacks these operating systems provided. The result was easier, quicker, and more secure implementation of IoT solutions targeting application processors.

This isn’t to say that microcontrollers haven’t found a niche in IoT solutions as well. However, implementing the various connectivity protocols, communication stacks, and security features with available microcontroller operating systems or bare-metal code became increasingly challenging for designers. The solution to this technical dilemma has come in the form of more packaged open-source operating systems targeting microcontrollers, such as Mbed OS, developed by Arm specifically for its 32-bit Cortex-M microcontroller cores. The recent announcement by Amazon Web Services (AWS) of support for an enhanced FreeRTOS with built-in connectivity to AWS IoT cloud services seems to signal that momentum behind this approach is building.

An added benefit of this approach is more robust security: packaged operating systems can bring to microcontrollers in IoT-enabled systems the kind of security support that operating systems like Windows and Linux provide on application processors. The ability to bolster security on microcontrollers will fuel continued growth in microcontroller adoption in the IoT, breathing new life and strategic value into the microcontroller market for years to come.

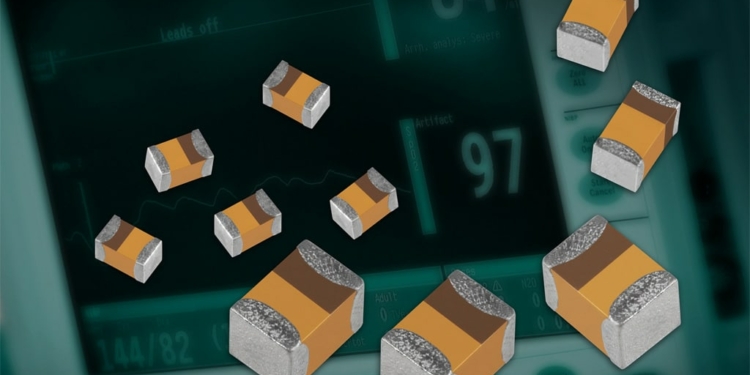

By Kevin Walker, Business Development Manager, Medical, AVX Corporation

In 2018, the designers and manufacturers of cutting-edge autonomous vehicles, handheld computing and communication devices, and wearable health monitors will adopt more component technologies and product design methodologies from critical, high-reliability systems such as military and aerospace systems and ultra-reliable implantable medical devices. The characteristics of these critical systems, especially their higher power efficiency and extremely consistent performance in ever-smaller form factors, are fast becoming cross-market requirements.

Featured Figure: AVX’s T4C Microchip Medical Series delivers the industry’s smallest medical tantalum capacitors (0402 case size) with low DC leakage (0.01 CV or 0.3 μA), along with change control for consistent supply and high standard reliability of better than 0.1 percent failures per 1,000 hours, which is 10 times better than standard commercial reliability. Ideal applications include filtering, hold-up, timing, and pulsing circuits in implantable, non-life-support, and non-implantable life-support applications, but the parts could easily extend to critical circuits in other markets.
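The reliability figure in the caption can be restated in the units reliability engineers often use. A rate of 0.1 percent per 1,000 hours is 0.001 / 1,000 = 10^-6 failures per device-hour, or about 1,000 FIT (failures per 10^9 device-hours). The "10 times better" comparison implies a commercial rating of roughly 1 percent per 1,000 hours, or about 10,000 FIT. The MTBF line assumes a constant (exponential) failure rate, which is a modeling assumption, not a datasheet claim; the function names are illustrative.

```python
def rate_per_hour(percent_per_1000h):
    """Convert 'percent failures per 1,000 hours' to failures per device-hour."""
    return (percent_per_1000h / 100.0) / 1000.0

def fit(percent_per_1000h):
    """Failures in time (FIT): failures per 10**9 device-hours."""
    return rate_per_hour(percent_per_1000h) * 1e9

def mtbf_hours(percent_per_1000h):
    """Mean time between failures, assuming a constant (exponential) failure rate."""
    return 1.0 / rate_per_hour(percent_per_1000h)

for label, pct in [("T4C-class rating", 0.1), ("implied commercial rating", 1.0)]:
    print(f"{label}: {fit(pct):,.0f} FIT, MTBF ~{mtbf_hours(pct):,.0f} h")
```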

Historically, the number one goal for manufacturers in the critical systems market was to ensure the utmost reliability by any means possible, including conservative design rules, rigorous performance testing, and stringent screening, even when these measures were not cost-effective. The commercial products market has historically been nearly the opposite, prioritizing low cost over top quality, which resulted in looser performance specifications. Today, with the newest mobile phones breaking the $1,000 barrier and safe, self-driving vehicles just over the horizon, consumers are ratcheting up their expectations for product capabilities, performance, and safety, largely to justify the cost.

The big hurdle for manufacturers will be to manufacture components and systems efficiently at the lowest possible total cost. For stakeholders, it will be employing value stream mapping and right-shoring to optimize business processes and intelligently evaluate the total applied cost of ownership of any component, rather than sub-optimizing on purchase price, as has historically been the tendency. For instance, depending on system performance expectations, an SMD capacitor with substantially lower leakage, a tiny fraction of the commercially accepted defect level, and a much longer predicted lifetime often has a lower total applied cost than a standard capacitor, even though its component cost is slightly higher.
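The total-applied-cost argument can be sketched as purchase price plus the expected cost of field failures. This is a deliberately simplified model with hypothetical numbers: `cost_per_failure` is assumed to bundle rework, warranty, and recall costs, and effects such as leakage-driven battery drain are ignored. It only shows how a higher unit price can still yield the lower total.

```python
def total_applied_cost(unit_price, lifetime_failure_prob, cost_per_failure):
    """Purchase price plus the expected cost of field failures over the service life."""
    return unit_price + lifetime_failure_prob * cost_per_failure

# Hypothetical figures, for illustration only.
standard = total_applied_cost(unit_price=0.05, lifetime_failure_prob=0.01,
                              cost_per_failure=50.0)
high_rel = total_applied_cost(unit_price=0.08, lifetime_failure_prob=0.001,
                              cost_per_failure=50.0)
print(f"standard: ${standard:.2f}  high-reliability: ${high_rel:.2f}")
```

Here the capacitor that costs 60 percent more per unit comes out at roughly a quarter of the total applied cost, because the expected failure cost dominates.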

In order to serve both sets of market expectations while continuing to thrive, manufacturers will have to embrace intelligent product design practices and product lifecycle management concepts, including:

Design for Excellence (DFX) methodologies that focus on designing for quality, reliability, and manufacturability, among other factors, to optimize both performance and cost. For instance, components for less cost-sensitive applications can be designed more conservatively so that performance levels and life cycle expectations are met with greater ease.

Maverick Lot Detection, which removes, as early as possible in the manufacturing sequence, lots that are not necessarily out of specification but perform somewhat differently from the rest, to minimize production costs and improve final product quality and yield. Proper implementation requires a rigorous statistical approach, data-driven decision-making, and an intimate understanding of product behavior.

Batch Reliability Grading, a critical component of comprehensive maverick lot detection programs, which uses accelerated testing and statistical methods such as Weibull analysis to help ensure consistent batch-to-batch performance and eliminate premature product mortality.
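Both practices rest on fairly standard statistics. The sketch below shows one common way to do each, not AVX's actual procedure. `maverick_lots` flags lots whose parameter is a robust outlier using the modified z-score (median and median absolute deviation, with the conventional 3.5 cutoff), so a lot can be flagged while still in spec. `weibull_fit` estimates the Weibull shape and scale from complete life-test data by median-rank regression. The lot data and the 0.3 uA limit in the example are hypothetical.

```python
import math
import statistics

def maverick_lots(lot_values, threshold=3.5):
    """Return lots whose value is a robust outlier among all lots.

    Uses the modified z-score 0.6745 * (x - median) / MAD; lots can be
    flagged even when every value is within specification.
    """
    values = list(lot_values.values())
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [lot for lot, v in lot_values.items()
            if abs(0.6745 * (v - med) / mad) > threshold]

def weibull_fit(failure_hours):
    """Estimate Weibull shape (beta) and scale (eta) by median-rank regression.

    Assumes complete data: every unit on test failed, no suspensions.
    """
    times = sorted(failure_hours)
    n = len(times)
    xs, ys = [], []
    for i, t in enumerate(times, start=1):
        f = (i - 0.3) / (n + 0.4)  # Bernard's median-rank approximation
        xs.append(math.log(t))
        ys.append(math.log(-math.log(1.0 - f)))
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    beta = sxy / sxx                      # slope of ln(-ln(1 - F)) against ln(t)
    eta = math.exp(x_bar - y_bar / beta)  # line is y = beta * (ln t - ln eta)
    return beta, eta

# Hypothetical mean DC leakage (uA) per lot; all lots sit below a hypothetical 0.3 uA limit.
leakage_ua = {"L01": 0.10, "L02": 0.11, "L03": 0.09, "L04": 0.10,
              "L05": 0.12, "L06": 0.10, "L07": 0.19}
print(maverick_lots(leakage_ua))  # ['L07']

# A fitted beta below 1 indicates a decreasing failure rate, the signature
# of early-life (infant mortality) failures that screening aims to remove.
```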

By Kevin Morgan, CMO, Clearfield and Chair-Elect, Fiber Broadband Association

By definition, the future is only what we can predict. When asked about the role of technology, the American businessman, investor, and philanthropist Steve Ballmer responded, “The number one benefit of information technology is that it empowers people to do what they want to do. It lets people be creative. It lets people be productive. It lets people learn things they didn’t think they could learn before, and so in a sense it is all about potential.” While technology has profoundly affected everyone, Ballmer’s point still rings true: we have yet to reach our potential. Individuals and corporations alike contend with the constantly expanding availability of technology. In 2018, the most profound impact will come from the confluence of 5G and fiber.

Today’s telecommunications market has an insatiable need for bandwidth to satisfy new applications. Bandwidth is the rate at which information is delivered from one machine to another. The bandwidth rate most often in the news today is gigabit (one billion bits per second). That may seem like a lot until you consider that, starting in 2018, 10 Gig is the target bandwidth. Here’s why.

Gigabit services were deployed directly to consumers and businesses using fiber as a wireline connection starting in 2009. Numerous studies have documented the linkage between economic growth and the availability of gigabit services. In short, gigabit services change lives.

Wireless networks also use gigabit rates; gigabit became part of the fabric of 4G LTE infrastructure. In 2018, however, these wireless networks will start demanding 10 Gig as the de facto data rate. 5G, the next step in the wireless evolution, is based on 10 Gig connections and is expected to support a huge boom in mobile connectivity: by 2021, the world will have about 27.1 billion networked devices, up roughly 58.5 percent from 17.1 billion in 2016.
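Because bandwidth is a rate, its practical meaning is easiest to see as transfer time. The sketch below compares an idealized transfer at gigabit and 10 Gig rates for a hypothetical 5 GB file (decimal units, ignoring protocol overhead and latency) and checks the device-growth percentage from the figures above.

```python
def transfer_seconds(size_bytes, rate_bps):
    """Ideal time to move size_bytes at rate_bps, ignoring overhead and latency."""
    return size_bytes * 8 / rate_bps

def percent_growth(old, new):
    """Percentage increase from old to new."""
    return (new - old) / old * 100.0

size = 5e9  # hypothetical 5 GB file (decimal gigabytes)
print(transfer_seconds(size, 1e9))   # 40.0 seconds at gigabit
print(transfer_seconds(size, 10e9))  # 4.0 seconds at 10 Gig
print(f"{percent_growth(17.1, 27.1):.1f}% device growth, 2016 to 2021")
```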

So, how will we reach our potential given all of this technology? I believe the answer depends on the speed at which we deploy fiber networks. More fiber is being deployed more quickly as deployment techniques improve through innovative, field-tested solutions designed to reduce the high costs of deploying, managing, protecting, and scaling a fiber optic network.

2018 will be the year 5G and fiber come together to let people reach their potential.

By John D’Ambrosia, Chairman of the Board, Ethernet Alliance

On December 6, 2017, the ratification of IEEE 802.3bs-2017, an amendment to the IEEE 802.3 Ethernet standard, introduced Ethernet operation at 200 Gb/s and 400 Gb/s. To achieve these data rates, multiple lanes at effective signaling rates of either 50 Gb/s or 100 Gb/s were bonded together. The modulation used for these lanes, both electrically and optically, was PAM4. Compared with NRZ signaling, which uses two amplitude levels to carry one bit per symbol, PAM4 uses four levels to carry two bits per symbol, effectively doubling the number of bits transmitted with each symbol, as illustrated in Figure 1.

Figure 1: NRZ and PAM4 signaling comparison.
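To make the comparison concrete, the sketch below encodes the same bit stream both ways. It assumes normalized amplitude levels (-1 and +1 for NRZ; -3, -1, +1, +3 for PAM4) and a Gray-coded bit-to-level mapping, a common choice because an error between adjacent levels then flips only one bit. The function names are illustrative, and the symbol rates are nominal figures that ignore line-coding and FEC overhead, which push real lane rates slightly higher.

```python
# Gray-coded PAM4 (assumed mapping): adjacent levels differ in exactly one bit.
PAM4_GRAY = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
NRZ_LEVELS = {0: -1, 1: 1}

def nrz_encode(bits):
    """One symbol per bit, two amplitude levels."""
    return [NRZ_LEVELS[b] for b in bits]

def pam4_encode(bits):
    """One symbol per bit pair, four amplitude levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 encoding needs an even number of bits")
    return [PAM4_GRAY[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]

def symbol_rate(bit_rate, bits_per_symbol):
    """Nominal symbol rate (baud), ignoring line-coding and FEC overhead."""
    return bit_rate / bits_per_symbol

bits = [1, 0, 0, 1, 1, 1, 0, 0]
print(nrz_encode(bits))   # [1, -1, -1, 1, 1, 1, -1, -1]
print(pam4_encode(bits))  # [3, -1, 1, -3]
print(symbol_rate(50e9, 1) / 1e9, symbol_rate(50e9, 2) / 1e9)  # 50.0 25.0
```

The same eight bits need eight NRZ symbols but only four PAM4 symbols, so a 50 Gb/s lane needs a nominal 50 GBd with NRZ but only 25 GBd with PAM4. The trade-off is that four levels fit in the same voltage swing, leaving less margin between them.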

With 200 GbE and 400 GbE ratified, PAM4 signaling will quickly enter the engineering mainstream, especially since one of the leading application spaces is hyperscale data centers, which are under continuous pressure to support ever-expanding demand. From the copper traces connecting integrated devices and optical modules to the optical links operating over single-mode fiber (SMF), the engineering community will be pressured to quickly educate itself to design, build, test, and deploy these solutions.

This is just the start, however. The IEEE P802.3cd project is leveraging 50 Gb/s lane rates to introduce 50 GbE, along with new physical layer specifications for 100 GbE and 200 GbE over backplanes, copper cables, and multi-mode fiber (MMF), as well as a single-lane 100 GbE solution for 500 m of SMF. Furthermore, newly formed IEEE 802.3 study groups (Beyond 10 km Optical PHYs, 100 Gb/s per Lane Electrical, and Next-generation 200 Gb/s and 400 Gb/s MMF PHYs) are expected to consider objectives that can be met with 50 Gb/s or 100 Gb/s PAM4 solutions.
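The lane-bonding arithmetic behind these rates is simple division of the MAC rate by the per-lane rate. The sketch below uses nominal lane rates and only shows which lane counts divide evenly; it does not claim that every combination is a defined PHY in the standard, and the helper names are hypothetical.

```python
def lanes_needed(mac_rate_gbps, lane_rate_gbps):
    """Lanes of a nominal rate bonded to carry a MAC rate, or None if the
    lane rate cannot divide the MAC rate evenly."""
    if lane_rate_gbps > mac_rate_gbps or mac_rate_gbps % lane_rate_gbps:
        return None
    return mac_rate_gbps // lane_rate_gbps

def describe(rate_gbps, lane_rates=(50, 100)):
    """List the even lane configurations for one Ethernet rate."""
    options = []
    for lane in lane_rates:
        n = lanes_needed(rate_gbps, lane)
        if n is not None:
            options.append(f"{n} x {lane}G")
    return f"{rate_gbps} GbE: " + " or ".join(options)

for rate in (50, 100, 200, 400):
    print(describe(rate))
```

Moving from 50 Gb/s to 100 Gb/s PAM4 lanes halves the lane count at each rate, which is why a single-lane 100 GbE link becomes possible.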

NRZ signaling has had a long run as the dominant signaling technology within the Ethernet community. Over the years, it has been used in multiple electrical and optical specifications, from 10 Mb/s all the way up to 25 Gb/s. However, there is a new sheriff in town, so the industry should be prepared for a 2018 in which every vendor showcases its PAM4 products or systems. Furthermore, given PAM4’s prominent role in Ethernet, we can expect continued industry research and refinement as it moves into wide-scale deployment.